Visual Perception of Contours, Surfaces, and Objects: Basic Research and Modeling

A central focus of our lab is discovering the processes and mechanisms involved in the visual perception of objects. We pursue this work through psychophysical experiments and computational modeling. Much of our research aims at a comprehensive understanding of how objects, contours, and surfaces are perceived from information that is fragmentary in space and time. We are also deeply interested in the visual perception and representation of shape (see the separate topic description on Shape).

Contour and Surface Interpolation Processes in Object Formation

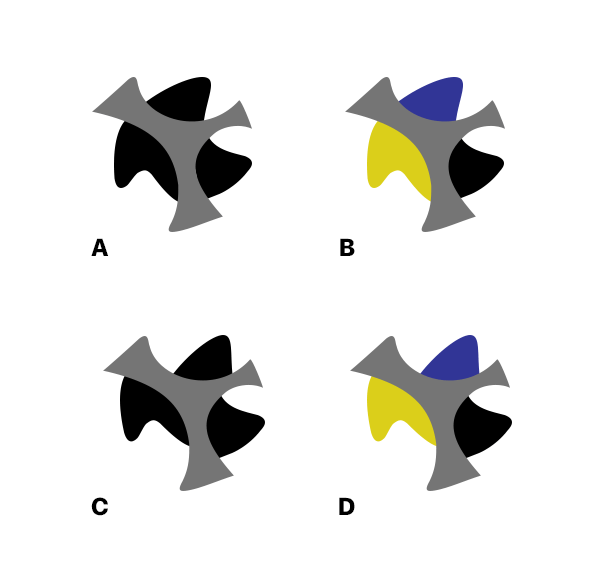

Our research has suggested that two complementary processes support the perception of objects from fragmentary visual information. Contour interpolation processes depend on the spatial relations of visible edge segments, whereas surface interpolation processes connect spatially separated visible regions based on the similarity of their surface properties. In the figure, the fragments separated by the gray occluding object are linked by both contour and surface interpolation in (A), by contour interpolation only in (B), by surface interpolation only in (C), and by neither interpolation process in (D).

Contour and Surface Interpolation. (A) Both contour and surface interpolation processes contribute to perceived unity of the three black regions behind the gray occluder. (B) Contour interpolation alone. (C) Surface interpolation alone. (D) Both contour and surface interpolation have been disrupted, causing the blue, yellow, and black regions to appear as three separate objects.

Current projects are advancing our understanding of both processes. These include new work that supports a two-stage theory of contour interpolation. A number of discrepant phenomena and controversies in the field can be understood with a model that posits an initial, “promiscuous” contour-linking stage, followed by further processing that applies a variety of scene constraints (for example, consistency of border ownership, closure, and the appearance of crossing interpolations) in determining perceived objects. The apparent paradox in some puzzling object formation phenomena is that the perception of unified objects depends both on a highly automatic, geometrically constrained, and “promiscuous” contour-linking process and on a second stage that reinforces, weakens, or even deletes Stage 1 contour connections in the final scene representation. One weakness of this general account has been the lack of a clear method for quantifying Stage 2 effects and modeling their interactions. Work now underway in our lab is beginning to clarify this picture considerably and to provide clear support for the overall two-stage account.

Neural Modeling of Visual Interpolation Processes

We have implemented a neural-style model of the basic contour-linking process in contour interpolation (Kalar et al., 2010, Vision Research). This model draws heavily on earlier work by Heitger et al. (1992, 1998) but shows how a common mechanism can carry out the basic contour-linking stage in both illusory and occluded contour contexts.
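To make “neural-style” concrete, here is a toy sketch of a generic bipole-style operator, a device common in neural models of illusory contours: every grid location receives support from two oriented inducers, and activation appears only where both lobes contribute. This is not the Kalar et al. (2010) or Heitger et al. model; the Gaussian distance falloff, the cosine-power angular tuning, the geometric-mean combination, and the names `lobe_support`, `bipole_activation`, and `activation_map` are all illustrative assumptions.

```python
import math
from typing import List, Tuple

# Hypothetical representation: an inducer is an edge endpoint (px, py) plus
# the direction (dx, dy) in which its edge would continue into the gap.
Inducer = Tuple[float, float, float, float]


def lobe_support(x: float, y: float, inducer: Inducer,
                 sigma: float = 3.0, k: int = 4) -> float:
    """Support one inducer lends to location (x, y): strongest straight ahead
    of the inducer, falling off with distance and angular deviation."""
    px, py, dx, dy = inducer
    vx, vy = x - px, y - py
    dist = math.hypot(vx, vy)
    if dist == 0.0:
        return 0.0
    cos_a = (vx * dx + vy * dy) / (dist * math.hypot(dx, dy))
    if cos_a <= 0.0:
        return 0.0  # locations behind the inducer get no support
    return math.exp(-dist ** 2 / (2 * sigma ** 2)) * cos_a ** k


def bipole_activation(x, y, inducer1, inducer2, **params) -> float:
    """Geometric mean of the two lobes: nonzero only if BOTH inducers
    support the location, so activation fills the gap between them."""
    s1 = lobe_support(x, y, inducer1, **params)
    s2 = lobe_support(x, y, inducer2, **params)
    return math.sqrt(s1 * s2)


def activation_map(width: int, height: int, inducer1: Inducer,
                   inducer2: Inducer, **params) -> List[List[float]]:
    """Apply the same operator at every grid location (row = y, column = x)."""
    return [[bipole_activation(c, r, inducer1, inducer2, **params)
             for c in range(width)] for r in range(height)]


# Two collinear inducers facing each other across a gap along row y = 2.
amap = activation_map(9, 5, (1, 2, 1, 0), (7, 2, -1, 0))
for row in amap:
    print(" ".join(f"{v:.2f}" for v in row))
```

The output is only a grid of numbers: an observer looking at the printed map can see a connection between the inducers, but nothing in the data structure states that a contour exists.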

Current modeling work has two major aims:

1) Developing a Two-Process Theory of Contour Interpolation

Whereas the first, contour-linking stage of contour interpolation likely involves a modular perceptual mechanism based on scene geometry, the second stage may involve the satisfaction of multiple constraints, the application of priors, and the combination of factors with differing weights. These different approaches to modeling perceptual phenomena may come together in a two-process theory. A weakness to date is that second-stage constraints and their interactions have received little systematic quantification, owing in part to the lack of good methods for isolating the second stage. In current work, we are developing successful methods for isolating it, and we have begun to quantify scene constraints in contour interpolation and to model their interactions.
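The two-stage idea can be sketched as a small pipeline. In this minimal illustration, which assumes a multiplicative combination rule that the text does not specify, Stage 1 keeps every geometrically admissible link, and each Stage 2 constraint returns a weight that reinforces (above 1), weakens (below 1), or leaves a link unchanged; links that fall below a deletion threshold are removed. Contrast polarity, one of the scene properties manipulated in this work, serves as the example constraint. The `Link` type, the fragment labels, the 0.3 polarity weight, and the threshold are hypothetical placeholders, not measured values.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Link:
    a: str                     # id of one visible fragment
    b: str                     # id of the other fragment
    geometric_strength: float  # Stage 1 score from scene geometry alone


Constraint = Callable[[Link], float]  # returns a multiplicative weight


def stage1_link(candidates: Iterable[Link]) -> List[Link]:
    """'Promiscuous' stage: keep every link the geometry admits,
    without regard to other scene properties."""
    return [link for link in candidates if link.geometric_strength > 0.0]


def stage2_filter(links: List[Link], constraints: List[Constraint],
                  delete_below: float = 0.3) -> Dict[Tuple[str, str], float]:
    """Apply each scene constraint as a weight; links whose final strength
    drops below `delete_below` are deleted from the scene description."""
    surviving = {}
    for link in links:
        strength = link.geometric_strength
        for constraint in constraints:
            strength *= constraint(link)
        if strength >= delete_below:
            surviving[(link.a, link.b)] = strength
    return surviving


# Hypothetical scene: fragment C has opposite contrast polarity to A and B.
polarity = {"A": +1, "B": +1, "C": -1}


def polarity_constraint(link: Link) -> float:
    # Illustrative weight only: penalize links between opposite polarities.
    return 1.0 if polarity[link.a] == polarity[link.b] else 0.3


candidates = [Link("A", "B", 0.9), Link("A", "C", 0.9), Link("B", "C", 0.0)]
stage1 = stage1_link(candidates)  # A-B and A-C survive Stage 1
final = stage2_filter(stage1, [polarity_constraint])
print(final)                      # only the A-B link remains
```

A multiplicative rule is only one option; additive or priority-based combinations fit the same outline, and determining which actually holds is the quantification problem described above.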

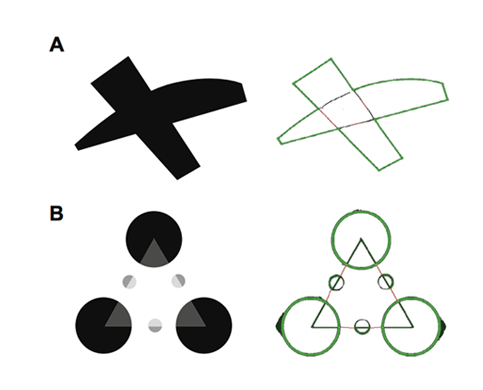

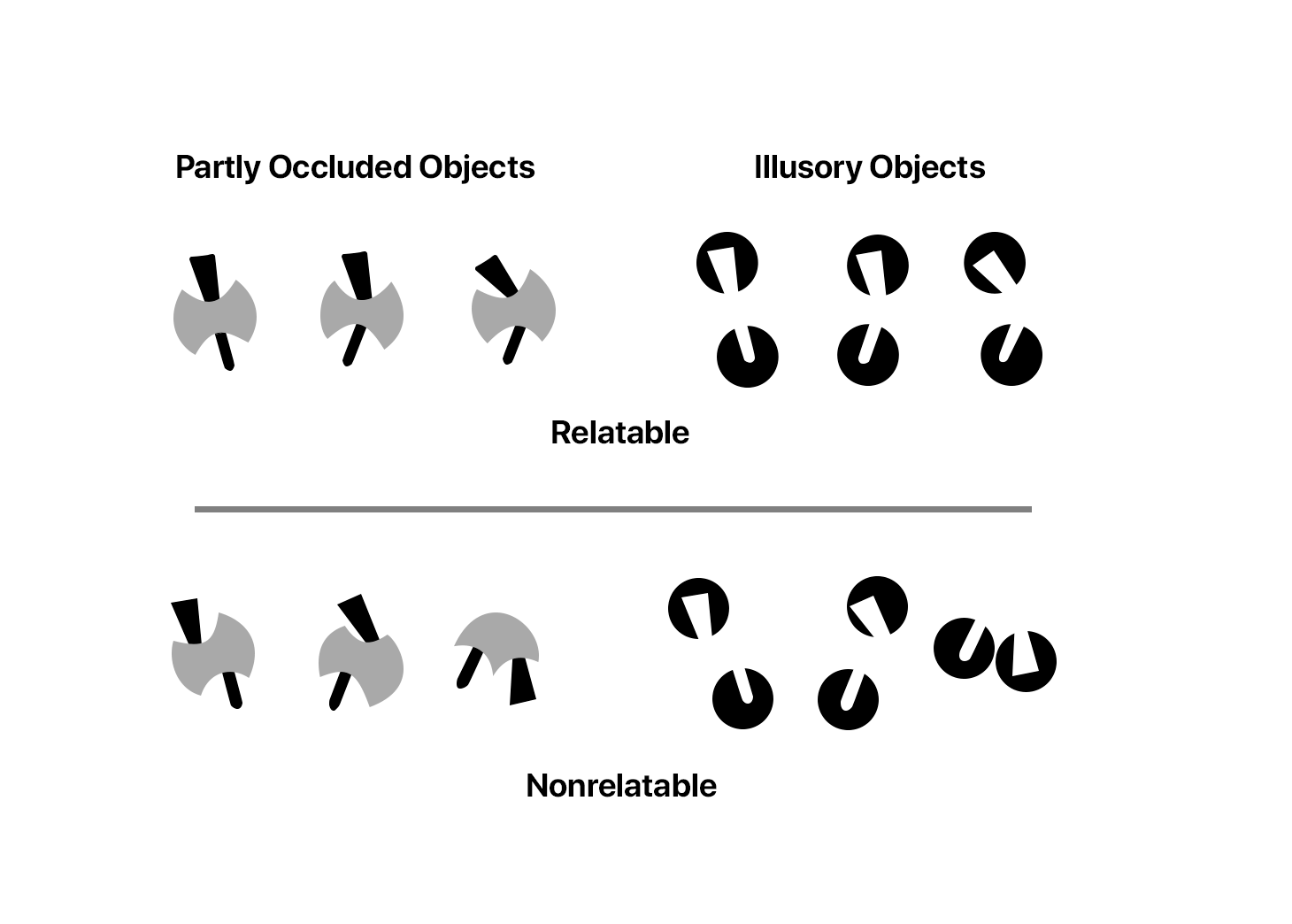

Graphical examples of contour relatability, which expresses the underlying geometry of contour interpolation in both amodal and modal completion. Top Row: Relatable edges produce amodal completion of the visible black regions (left column) and, for the same physically specified edges, illusory contours (right column). Bottom Row: Disruption of contour relatability leads to perception of separate fragments (left) and the absence of illusory contours (right).
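Relatability can be stated geometrically. The sketch below assumes the commonly cited verbal criterion from Kellman & Shipley (1991): two edges are relatable when their linear extensions meet in front of both edge ends (or the edges are collinear and face each other) and the bend needed to join them is at most 90°. It checks only these two conditions rather than the paper's fuller formal statement, and the function name and vector representation are illustrative.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]


def _cross(a: Vec, b: Vec) -> float:
    return a[0] * b[1] - a[1] * b[0]


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1]


def _unit(v: Vec) -> Vec:
    n = math.hypot(v[0], v[1])
    return (v[0] / n, v[1] / n)


def is_relatable(p1: Vec, d1: Vec, p2: Vec, d2: Vec, eps: float = 1e-9) -> bool:
    """p1, p2: points where the visible edges end (e.g., at an occluder).
    d1, d2: directions in which each edge would continue into the gap."""
    d1, d2 = _unit(d1), _unit(d2)
    gap = (p2[0] - p1[0], p2[1] - p1[1])
    denom = _cross(d1, d2)
    if abs(denom) < eps:
        # Parallel extensions: relatable only if collinear and facing each other.
        collinear = abs(_cross(d1, gap)) < eps
        facing = _dot(d1, d2) < 0 and _dot(d1, gap) > 0
        return collinear and facing
    # Solve p1 + t*d1 == p2 + s*d2 for the intersection of the extensions.
    t = _cross(gap, d2) / denom
    s = _cross(gap, d1) / denom
    if t < 0 or s < 0:
        return False  # extensions meet behind at least one edge end
    # The joined contour turns from heading d1 to heading -d2; the bend is
    # at most 90 degrees exactly when cos(bend) = dot(d1, -d2) >= 0.
    return -_dot(d1, d2) >= -eps


print(is_relatable((0, 0), (1, 0), (1, 1), (0, -1)))  # 90-degree bend: True
print(is_relatable((0, 0), (1, 0), (0, 1), (1, -1)))  # 135-degree bend: False
print(is_relatable((0, 0), (1, 0), (2, 1), (-1, 0)))  # offset parallel: False
```

Testing the cosine instead of computing the angle avoids floating-point trouble at exactly 90°, which this sketch counts as relatable to match the "at most 90°" wording.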

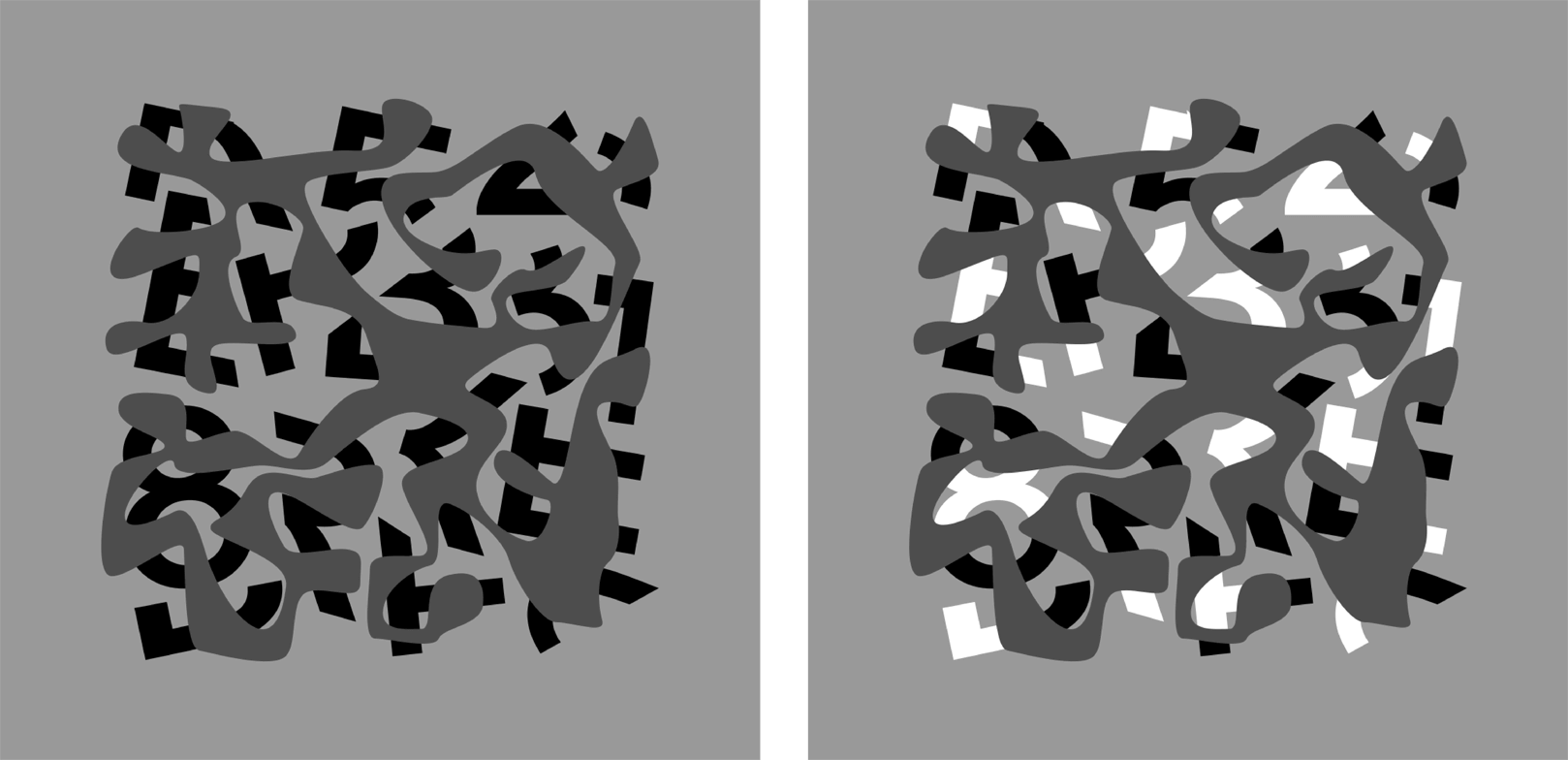

Using Character Recognition to Assess Outputs of the Second Stage of Contour Interpolation. When used in a speeded performance task, displays like the one shown above, derived from a classic illustration by Bregman (1991), may allow access to the second stage of contour interpolation, because recognition processes have access to the scene descriptions generated at that stage. These methods allow quantification of the strength of particular scene constraints, alone and in combination. The example shows relatable fragments with opposite contrast polarity (cf. Su, He & Ooi, 2010).

2) Bridging Subsymbolic Activations and Symbolic Descriptions in Vision

A major objective of continuing work is to bridge the subsymbolic and symbolic aspects of visual processing. Whereas early cortical processing involves many contrast-sensitive units with local receptive fields, perceptual descriptions of objects and their shapes require more configural and symbolic representations. Most research in vision works on one side or the other of this divide. For example, neural-style models of contour interpolation (e.g., Heitger et al., 1998; Kalar et al., 2010) use spatially parallel, distributed operators to signal interpolation “activation” at various locations; their outputs are typically images in which a human observer can see, for example, the illusory contour connections between inducing elements. However, the contours in these models are really just sets of activated points. The models have no representation of contour tokens, their shapes, whether contours close, and so on. Arguably, human perception has all of these symbolic descriptions, and more. We are working on rigorously specified, next-generation models that go from natural images to descriptions of completed contours, whole objects, and shapes in the scene descriptions produced by seeing.
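The gap between activation maps and contour tokens can be illustrated with a toy conversion step. This sketch assumes the activation map has already been reduced to a one-pixel-thin, unbranched, 4-connected trace, which real model outputs rarely are; it orders the active points along the trace and records whether the contour closes. `ContourToken` and `activation_to_token` are hypothetical names, and this is not the lab's next-generation model.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple

Point = Tuple[int, int]  # (row, column)


@dataclass
class ContourToken:
    points: List[Point]  # ordered along the contour
    closed: bool         # does the contour return to its start?


def _neighbors(p: Point, active: Set[Point]) -> List[Point]:
    r, c = p
    return [q for q in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
            if q in active]


def activation_to_token(activation: List[List[float]],
                        threshold: float = 0.5) -> Optional[ContourToken]:
    """Turn a thin, unbranched activation trace into an ordered contour token."""
    active = {(r, c) for r, row in enumerate(activation)
              for c, v in enumerate(row) if v >= threshold}
    if not active:
        return None
    # Open contours have endpoints with exactly one active neighbor.
    endpoints = sorted(p for p in active if len(_neighbors(p, active)) == 1)
    start = endpoints[0] if endpoints else min(active)
    ordered, visited, current = [start], {start}, start
    while True:
        nxt = sorted(q for q in _neighbors(current, active) if q not in visited)
        if not nxt:
            break
        current = nxt[0]
        ordered.append(current)
        visited.add(current)
    # Closed only if there are no endpoints, the walk covered every active
    # point, and the last point is adjacent to the first.
    closed = (not endpoints and len(ordered) == len(active)
              and start in _neighbors(current, active))
    return ContourToken(ordered, closed)


ring = activation_to_token([[1, 1, 1], [1, 0, 1], [1, 1, 1]])
print(ring.closed, len(ring.points))  # True 8
```

Once points are ordered into a token, properties such as closure, length, or curvature can be computed on the contour itself rather than read off an image by an observer.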

3D and Spatiotemporal Interpolation

Our lab and collaborators have made pioneering contributions to the study of contour and surface interpolation in object formation as 3D and spatiotemporal processes (Kellman, 1984, Perception & Psychophysics; Kellman, Garrigan & Shipley, 2005, Psych. Review; Palmer, Kellman & Shipley, 2006, JEP: General; Fantoni, Gerbino, & Kellman, 2008, Vision Research). We also discovered and characterized the related process of spatiotemporal boundary formation (SBF) and developed models of it (see Shipley & Kellman, 1994, JEP: General; Erlikhman, Xing, & Kellman, 2014, Frontiers in Human Neuroscience; Erlikhman & Kellman, 2015, Vision Research; Erlikhman & Kellman, 2016, Frontiers in Psychology). Some demos from this work can be seen in the Shape section of this website. Research continues to advance these areas, often focusing on the core processes that unify 2D, 3D, and spatiotemporal object formation.

Researchers

- Philip J. Kellman
- Everett Mettler
- Suzy Carrigan
- Nicholas Baker

Collaborators

- Gennady Erlikhman
- Jennifer Mnookin (UCLA)
- Itiel Dror (UCL)
- Hongjing Lu (UCLA)
- Patrick Garrigan (SJU)
- Brian Keane (Rutgers)
- Tandra Ghose (TU Kaiserslautern)

Selected Publications

- Kellman, P. J., & Fuchser, V. (2023). Visual completion and intermediate representations in object formation. To appear in A. Mroczko-Wąsowicz and R. Grush (Eds.), Sensory Individuals: Unimodal and Multimodal Perspectives (pp. 55-76). NY: Oxford University Press.

- Phillips, A., Erlikhman, G., Fuchser, V. & Kellman, P. J. (in preparation). Path integration and illusory contours: Evidence for an intermediate representation in visual contour interpolation.

- Baker, N., & Kellman, P. J. (2024). Shape from dots: a window into abstraction processes in visual perception. Frontiers in Computer Science, 6, 1367534.

- Kellman, P. J., Baker, N., Garrigan, P., Phillips, A., & Lu, H. (2023). For deep networks, the whole equals the sum of the parts. Behavioral and Brain Sciences, 46, e396.

- Baker, N., & Kellman, P. J. (2023). Independent mechanisms for processing local contour features and global shape. Journal of Experimental Psychology: General, 152(5), 1502–1526.

- Baker, N., Garrigan, P., Phillips, A., & Kellman, P. J. (2023). Configural relations in humans and deep convolutional neural networks. Frontiers in Artificial Intelligence, 5, 961595.

- Mroczko-Wasowicz, A., O'Callaghan, C., Cohen, J., Scholl, B., & Kellman, P. J. (2023). Advances in the study of visual and multisensory objects. In Proceedings of the Annual Meeting of the Cognitive Science Society (Vol. 45), 32-33.

- Baker, N. & Kellman, P. J. (2021). Constant curvature modeling of abstract shape representation. PLOS ONE, 16(8): 30254719

- Baker, N., Garrigan, P. & Kellman, P. J. (2020). Constant curvature segments as building blocks of 2D shape representation. Journal of Experimental Psychology: General, doi.org/10.1037/xge0001007.

- Baker, N., Lu, H., Erlikhman, G., & Kellman, P.J. (2020). Local features and global shape information in object classification by deep convolutional neural networks. Vision Research, 172, 46-61. doi: 10.1016/j.visres.2020.04.003

- Baker, N., Lu, H., Erlikhman, G., & Kellman, P. J. (2018). Deep convolutional networks do not classify based on global object shape. PLOS Computational Biology, https://doi.org/10.1371/journal.pcbi.1006613.

- Baker, N., Kellman, P.J., Erlikhman, G. & Lu, H. (2018). Deep convolutional networks do not perceive illusory contours. In T.T. Rogers, M. Rau, X. Zhu, & C. W. Kalish (Eds.), Proceedings of the 40th Annual Conference of the Cognitive Science Society (pp. 1310-1315). Austin, TX: Cognitive Science Society.

- Baker, N. & Kellman, P.J. (2018). Abstract shape representation in human visual perception. Journal of Experimental Psychology: General, 147(9), 1295-1308. doi: 10.1037/xge0000409

- Palmer, E. M., & Kellman, P. J. (2017). The aperture capture illusion. In D. Todorovic & A. G. Shapiro (Eds.), Oxford compendium of visual illusions. NY: Oxford University Press.

- Carrigan, S. B., Palmer, E. M., & Kellman, P. J. (2016). Differentiating global and local contour completion using a dot localization paradigm. Journal of Experimental Psychology: Human Perception and Performance. Advanced online publication. doi: 10.1037/xhp0000233

- Erlikhman, G., & Kellman, P. J. (2016). From flashes to edges to objects: Recovery of local edge fragments initiates spatiotemporal boundary formation. Frontiers in Psychology, 7, 910.

- Erlikhman, G., & Kellman, P. J. (2015). Modeling spatiotemporal boundary formation. [Special issue on quantitative approaches in Gestalt perception]. Vision Research, 126, 131–142.

- Erlikhman, G., Xing, Y. Z., & Kellman, P. J. (2014). Non-rigid illusory contours and global shape transformations defined by spatiotemporal boundary formation. Frontiers in Human Neuroscience, 8, 1-13.

- Ghose, T., Liu, J., & Kellman, P. J. (2014). Recovering metric properties of objects through spatiotemporal interpolation. Vision Research, 102, 80-88.

- Kellman, P. J., Mnookin, J., Erlikhman, G., Garrigan, P., Ghose, T., Mettler, E., Charlton, D. & Dror, I. E. (2014). Forensic comparison and matching of fingerprints: Using quantitative image measures for estimating error rates through understanding and predicting difficulty. PLoS ONE, 9(5), e94617.

- Palmer, E., & Kellman, P. J. (2014). The aperture capture illusion: Misperceived forms in dynamic occlusion displays. Journal of Experimental Psychology: Human Perception and Performance, 40(2), 502-524.

- Kellman, P. J., Garrigan, P., & Erlikhman, G. (2013). Challenges in understanding visual shape perception and representation: Bridging subsymbolic and symbolic coding. In S. J. Dickinson & Z. Pizlo (Eds.), Shape Perception in Human and Computer Vision: An Interdisciplinary Perspective (pp. 249-274). London: Springer-Verlag.

- Erlikhman, G., Keane, B. P., Mettler, E., Horowitz, T. S., & Kellman, P. J. (2013). Automatic feature-based grouping during multiple object tracking. Journal of Experimental Psychology: Human Perception and Performance, 39(6), 1625-1637. doi: 10.1037/a0031750

- Keane, B. P., Lu, H., Papathomas, T. V., Silverstein, S. M., & Kellman, P. J. (2013). Reinterpreting behavioral receptive fields: Lightness induction alters visually completed shape. PLoS ONE, 8(6), e62505. doi:10.1371/journal.pone.0062505

- Keane, B. P., Lu, H., Papathomas, T. V., Silverstein, S. M., & Kellman, P. J. (2012). Is interpolation cognitively encapsulated? Measuring the effects of belief on Kanizsa shape discrimination and illusory contour formation. Cognition, 123(3), 404-418.

- Garrigan, P., & Kellman, P. J. (2011). The role of constant curvature in 2-D contour shape representations. Perception, 40(11), 1290-1308.

- Keane, B. P., Mettler, E., Tsoi, V., & Kellman, P. J. (2011). Attentional signatures of perception: Multiple object tracking reveals the automaticity of contour interpolation. Journal of Experimental Psychology: Human Perception and Performance, 37(3), 685-698.

- Kalar, D. J., Garrigan, P., Wickens, T. D., Hilger, J. D., & Kellman, P. J. (2010). A unified model of illusory and occluded contour interpolation. Vision Research, 50(3), 284-299.

- Unuma, H., Hasegawa, H., & Kellman, P. J. (2010). Spatiotemporal integration and contour interpolation revealed by a dot localization task with serial presentation paradigm. Japanese Psychological Research, 52(4), 268-280.

- Ghose, T., Liu, J. & Kellman, P. J. (2010). Measuring size of a never present object: Visual object formation through spatiotemporal interpolation. In A. Bastianelli et al. (Eds.) Proceedings of the International Society for Psychophysics, (pp. 171-174). Society for Psychophysics.

- Kellman, P. J., Garrigan, P., & Palmer, E. M. (2010). 3D and spatiotemporal interpolation in object and surface formation. In C. W. Tyler (Ed.), Computer vision: From surfaces to 3D objects (pp. 183-207). Boca Raton, FL: Chapman & Hall/CRC.

- Fantoni, C., Hilger, J., Gerbino, W. & Kellman, P. J. (2008). Surface interpolation and 3D relatability. Journal of Vision, 8(7), 1-19.

- Keane, B. P., Lu, H., & Kellman, P. J. (2007). Classification images reveal spatiotemporal contour interpolation. Vision Research, 47(28), 3460-3475.

- Kellman, P. J., Garrigan, P., Shipley, T. F., & Keane, B. P. (2007). Postscript: Identity and constraints in models of object formation. Psychological Review, 114(2), 502-508.

- Kellman, P. J., Garrigan, P., Shipley, T. F., & Keane, B. P. (2007). Interpolation processes in object perception: Reply to Anderson (2007). Psychological Review, 114(2), 488-502.

- Kellman, P. J., & Garrigan, P. (2007). Segmentation, grouping, and shape: Some Hochbergian questions. In M. A. Peterson, B. Gillam, & H. A. Sedgwick (Eds.), In the mind's eye: Julian Hochberg on the perception of pictures, films, and the world. NY: Oxford University Press.

- Palmer, E. M., Kellman, P. J., & Shipley, T. F. (2006). A theory of dynamic occluded and illusory object perception. Journal of Experimental Psychology: General, 135, 513-541. [Awarded 2007 American Psychological Association prize to E.M. Palmer for best paper published in JEP: General by a young investigator.]

- Kellman, P. J., Garrigan, P., & Shipley, T. F. (2005). Object interpolation in three dimensions. Psychological Review, 112(3), 586-609.

- Kellman, P. J., Garrigan, P., Shipley, T. F., Yin, C., & Machado, L. (2005). 3-d interpolation in object perception: Evidence from an objective performance paradigm. Journal of Experimental Psychology: Human Perception and Performance, 31(3), 558-583.

- Guttman, S. E., & Kellman, P. J. (2004). Contour interpolation revealed by a dot localization paradigm. Vision Research, 44(15), 1799-1815.

- Guttman, S. E., Sekuler, A. B., & Kellman, P. J. (2003). Temporal variations in visual completion: A reflection of spatial limits? Journal of Experimental Psychology: Human Perception and Performance, 29(6), 1211-1227.

- Shipley, T. F., & Kellman, P. J. (2003). Boundary completion in illusory contours: Interpolation or extrapolation? Perception, 32(8), 985-999.

- Kellman, P. J. (2003). Interpolation processes in the visual perception of objects [Special issue]. Neural Networks, 16(5-6), 915-923.

- Kellman, P. J. (2003). Visual perception of objects and boundaries: A four-dimensional approach. In R. Kimchi, M. Behrmann, & C. R. Olson (Eds.), Perceptual Organization in Vision: Behavioral and Neural Perspectives: The 31st Carnegie Symposium on Cognition (pp. 155-201). Mahwah, NJ: Erlbaum.

- Kellman, P. J. (2002). Vision: Occlusion, illusory contours and 'filling in'. In L. Nadel (Ed.), Encyclopedia of Cognitive Science. New York: Nature Publishing Group.

- Kellman, P. J., Guttman, S. E., & Wickens, T. D. (2001). Geometric and neural models of object perception. In T. F. Shipley & P. J. Kellman (Eds.), From fragments to objects: Segmentation and grouping in vision (pp. 183-245). Amsterdam: Elsevier Science.

- Yin, C., Kellman, P. J., & Shipley, T. F. (2000). Surface integration influences depth discrimination. Vision Research, 40(15), 1969-1978.

- Kellman, P. J. (2000). An update on Gestalt Psychology. In B. Landau, J. Sabini, J. Jonides, & E. L. Newport (Eds.), Essays in honor of Henry and Lila Gleitman (pp. 157-190). Cambridge, MA: MIT Press.

- Cunningham, D. W., Shipley, T. F., & Kellman, P. J. (1998). The dynamic specification of surfaces and boundaries. Perception, 27(4), 403-415.

- Cunningham, D. W., Shipley, T. F., & Kellman, P. J. (1998). Interactions between spatial and spatiotemporal information in spatiotemporal boundary formation. Perception & Psychophysics, 60(5), 839-851.

- Kellman, P. J., Yin, C., & Shipley, T. F. (1998). A common mechanism for illusory and occluded object completion. Journal of Experimental Psychology: Human Perception and Performance, 24(3), 859-869.

- Yin, C., Kellman, P. J., & Shipley, T. F. (1997). Surface completion complements boundary interpolation in the visual integration of partly occluded objects. Perception, special issue on surface appearance, 26(11), 1459-1479.

- Kellman, P. J. (1997). From chaos to coherence: How the visual system recovers objects. Psychological Science Agenda of the American Psychological Association, 10(4), 8-9.

- Shipley, T. F., & Kellman, P. J. (1994). Spatiotemporal boundary formation: Boundary, form, and motion from transformations of surface elements. Journal of Experimental Psychology: General, 123(1), 3-20.

- Shipley, T. F., & Kellman, P. J. (1993). Optical tearing in spatiotemporal boundary formation: When do local element motions produce boundaries, form, and global motion? Spatial Vision, 7(4), 323-339.

- Kellman, P. J. & Shipley, T. F. (1992). Visual interpolation in object perception. Current Directions in Psychological Science, 1(6), 193-199.

- Shipley, T. F., & Kellman, P. J. (1992). Strength of visual interpolation depends on the ratio of physically specified to total edge length. Perception & Psychophysics, 52(1), 97-106.

- Shipley, T. F., & Kellman, P. J. (1992). Perception of partly occluded objects and illusory figures: Evidence for an identity hypothesis. Journal of Experimental Psychology: Human Perception and Performance, 18(1), 106-120.

- Kellman, P. J., & Shipley, T. F. (1991). A theory of visual interpolation in object perception. Cognitive Psychology, 23, 141-221.

- Shipley, T. F., & Kellman, P. J. (1990). The role of discontinuities in the perception of subjective contours. Perception & Psychophysics, 48(3), 259-270.

- Kellman, P. J., & Loukides, M. G. (1987). An object perception approach to static and kinetic subjective contours. In S. Petry & G. E. Meyer (Eds.), The perception of illusory contours (pp. 151-164). New York: Springer-Verlag.